
Amazon k8s Getting-Started Series: Deploying the Base Components

Published: 2021-06-30 16:28 | Views: 475 | Comments: 0

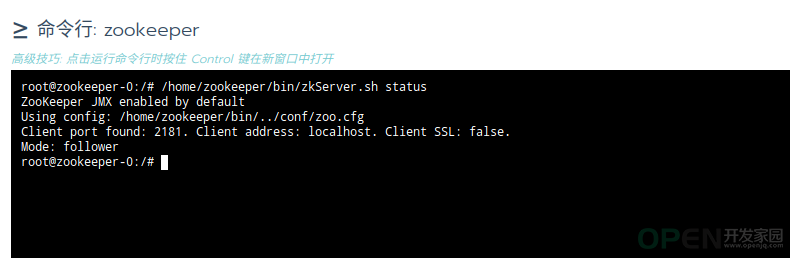

Introduction: ZooKeeper

Deploying ZooKeeper:

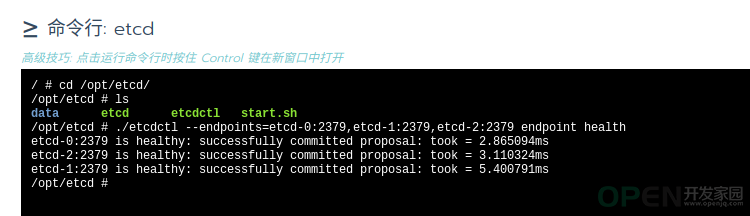

etcd:

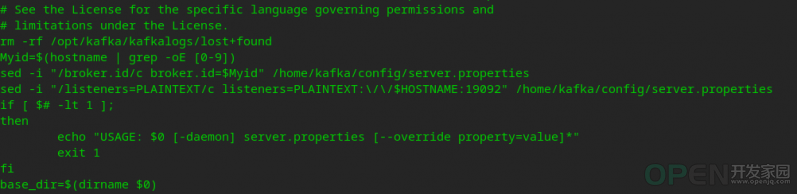

Deploying Kafka: Deployment and StatefulSet
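The practical difference between the two controllers for Kafka is identity. Pods created by a Deployment get random name suffixes and are treated as interchangeable, while pods created by a StatefulSet get stable ordinal names (`kafka-0`, `kafka-1`, ...) and, when paired with a headless Service, stable DNS records of the form `<pod>.<service>.<namespace>.svc.<cluster-domain>`. Brokers need that stable identity, which is why Kafka is usually run as a StatefulSet. The minimal sketch below only computes those addresses; the StatefulSet name `kafka`, the headless Service `kafka-headless`, the `default` namespace and port 9092 are hypothetical values, not taken from this article.

```python
# Hypothetical sketch: derive stable broker addresses for a Kafka StatefulSet.
# Assumed (not from the article): StatefulSet "kafka", headless Service
# "kafka-headless", namespace "default", listener port 9092.


def statefulset_pod_fqdns(sts_name: str, service: str, namespace: str,
                          replicas: int, domain: str = "cluster.local") -> list[str]:
    """Return the DNS names Kubernetes gives StatefulSet pods behind a headless Service."""
    return [
        f"{sts_name}-{ordinal}.{service}.{namespace}.svc.{domain}"
        for ordinal in range(replicas)
    ]


def bootstrap_servers(fqdns: list[str], port: int = 9092) -> str:
    """Join pod DNS names into a comma-separated bootstrap.servers string."""
    return ",".join(f"{host}:{port}" for host in fqdns)


def ordinal_from_hostname(hostname: str) -> int:
    """Extract the ordinal suffix from a StatefulSet pod hostname, e.g. 'kafka-2' -> 2.

    A common (but optional) convention is to reuse this ordinal as the broker.id.
    """
    return int(hostname.rsplit("-", 1)[1])


if __name__ == "__main__":
    hosts = statefulset_pod_fqdns("kafka", "kafka-headless", "default", replicas=3)
    print(bootstrap_servers(hosts))
    print(ordinal_from_hostname("kafka-2"))
```

With a Deployment, none of these names would survive a pod restart, so clients and peer brokers could not rely on fixed addresses.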

