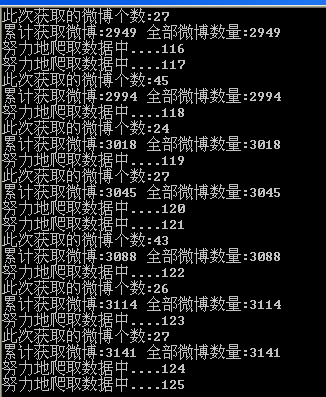

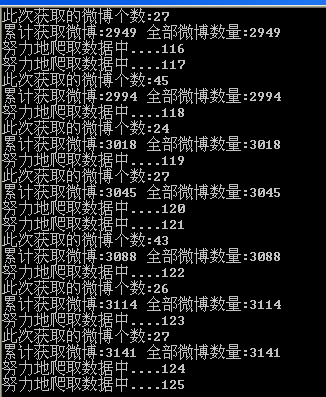

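To practice Python, I wrote a script that fetches the latest posts from the Tencent Weibo public timeline (广播大厅) in real time and saves each query result to a file in JSON format, one result per line. It follows on from my previous post, http://loma1990.blog.51cto.com/6082839/1308205; the main thing you need to do is add your appkey, open_id and access_token in the createApiCaller() function. I have tested it for real: it has collected more than 2.8 million posts. The results are shown in the images above.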
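Because every query result is appended as one JSON document per line, the data file is effectively a JSON Lines file and can be read back line by line. The following is a minimal Python 3 sketch of writing and re-reading that format. It assumes each document has the `{"data": {"info": [{"id": ...}, ...]}}` shape that the script relies on. `append_result` and `load_ids` are hypothetical helper names, not part of the script.

```python
import json
import os
import tempfile


def append_result(path, result):
    """Append one API result to `path` as a single UTF-8 JSON line."""
    with open(path, "a", encoding="utf-8") as f:
        # ensure_ascii=False keeps Chinese text readable in the file
        f.write(json.dumps(result, ensure_ascii=False) + "\n")


def load_ids(path):
    """Return the set of post ids stored in the file (empty if it does not exist)."""
    ids = set()
    if not os.path.exists(path):
        return ids
    with open(path, encoding="utf-8") as f:
        for line in f:
            if not line.strip():  # tolerate blank lines
                continue
            for post in json.loads(line)["data"]["info"]:
                ids.add(post["id"])
    return ids


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "weibo.txt")  # throwaway demo file
    append_result(path, {"data": {"info": [{"id": "1", "text": "你好"}]}})
    append_result(path, {"data": {"info": [{"id": "2", "text": "hello"}]}})
    print(sorted(load_ids(path)))  # ['1', '2']
```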

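The code is as follows:

```python
#! /usr/bin/env python
#coding=utf-8
#Author:loma
#Continuously fetch posts from the Tencent Weibo public timeline

'''
@author loma
qq:124172231 mail:lwsbox@qq.com
* Copyright (c) 2013, loma All Rights Reserved.
'''

import urllib2
import urllib
import webbrowser
import urlparse
import os
import time
import json
import sys

# Python 2 only: make implicit str/unicode conversions (e.g. writing
# unicode JSON to a file) use UTF-8 instead of ASCII
reload(sys)
sys.setdefaultencoding('utf-8')
```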

```python
class ApiManager:

    # Build the public parameters that every API call needs
    def getPublicParams(self, appKey, access_token, open_id):
        params = {}
        params['oauth_consumer_key'] = appKey
        params['access_token'] = access_token
        params['openid'] = open_id
        params['oauth_version'] = '2.a'
        params['scope'] = 'all'
        return params

    # Open the authorization page in the default web browser
    def OAuth2(self, appKey, redirect_url, response_type='token'):
        fmt = 'https://open.t.qq.com/cgi-bin/oauth2/authorize?client_id=%s&response_type=%s&redirect_uri=%s'
        # redirect_uri is itself a URL, so escape it before putting it in the query string
        url = fmt % (appKey, response_type, urllib.quote(redirect_url, safe=''))
        webbrowser.open_new_tab(url)

    # Parse access_token, openid, etc. out of the redirect URL into the params dict.
    # With response_type=token they come back in the fragment (after '#'), so '#'
    # is replaced with '?' and the result is parsed as a query string.
    # Note: parse_qs maps every key to a *list* of values.
    def decodeUrl(self, urlStr):
        urlStr = urlStr.replace('#', '?')
        result = urlparse.urlparse(urlStr)
        params = urlparse.parse_qs(result.query, True)
        for a in params:
            print params[a]
        return params

    # Call a Tencent Weibo Open Platform API; returns the urllib2 response object
    def doRequest(self, appKey, open_id, access_token, apiStr, params):
        apiHead = 'http://open.t.qq.com/api/'
        requestParams = self.getPublicParams(appKey, access_token, open_id)
        requestParams.update(params)
        url = apiHead + apiStr + "?" + urllib.urlencode(requestParams)
        return urllib2.urlopen(url)
```
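doRequest() merges the public OAuth parameters from getPublicParams() with the API-specific ones and appends them to the API URL as a query string. The following is the same idea as a Python 3 sketch, using `urllib.parse.urlencode` in place of Python 2's `urllib.urlencode`. `build_api_url` is a hypothetical name, the credential values are placeholders, and nothing is sent over the network.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

API_HEAD = "http://open.t.qq.com/api/"  # base URL taken from doRequest()


def build_api_url(app_key, open_id, access_token, api, params):
    """Return the GET URL for `api`: public OAuth parameters plus `params`.

    As with dict.update() in doRequest(), a key in `params` overrides a
    public parameter of the same name.
    """
    request_params = {
        "oauth_consumer_key": app_key,
        "access_token": access_token,
        "openid": open_id,
        "oauth_version": "2.a",
        "scope": "all",
    }
    request_params.update(params)
    return API_HEAD + api + "?" + urlencode(request_params)


if __name__ == "__main__":
    url = build_api_url("APPKEY", "OPENID", "TOKEN", "statuses/public_timeline",
                        {"format": "json", "pos": 0, "reqnum": 100})
    print(url)
    # Decoding the query string gives the parameters back (values as strings)
    print(parse_qs(urlsplit(url).query)["reqnum"])  # ['100']
```

Numbers such as `pos` and `reqnum` are converted to strings by `urlencode`. This is why the script can pass them either as `0` or as `'0'`.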

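```python
# ApiCaller is responsible for calling the API; it rotates through the
# registered accounts (appKey/open_id/access_token) in round-robin order
class ApiCaller:
    def __init__(self):
        self.count = 0          # key (1..len(self.Callers)) of the caller to use next
        self.apiManager = ApiManager()
        self.Callers = {}

    # Register a caller
    def addCaller(self, appKey, open_id, access_token):
        self.Callers[len(self.Callers) + 1] = {"appKey": appKey, "open_id": open_id, "access_token": access_token}
        self.count = self.count + 1

    # Call the API; returns (True, response), or (False, None) if no caller is registered
    def callAPI(self, apiStr, params):
        if self.count <= 0 or self.count > len(self.Callers):
            return False, None
        caller = self.Callers[self.count]
        # Move on to the next caller: ... -> n -> 1 -> 2 -> ... -> n -> 1 ...
        self.count = self.count % len(self.Callers) + 1
        while True:
            try:
                data = self.apiManager.doRequest(caller["appKey"], caller["open_id"], caller["access_token"], apiStr, params)
                break
            except Exception:
                # Network/HTTP error: wait and retry with the same caller
                print u"Retrying connection in 10s"
                time.sleep(10)
        return True, data
```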
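ApiCaller combines two ideas: it rotates requests across several accounts, and it retries a failed request after a fixed delay. The following is a hedged Python 3 sketch of both. It uses `itertools.cycle`, which avoids hand-written index arithmetic, where an off-by-one such as `% (n - 1)` can leave the rotation stuck on one caller. `RoundRobinCaller` is a hypothetical class. Its `request` argument stands in for `ApiManager.doRequest()`, so the sketch runs without network access. It also adds an optional `max_attempts` limit that the script does not have.

```python
import itertools
import time


class RoundRobinCaller:
    """Rotate through credentials and retry failed requests (illustrative sketch)."""

    def __init__(self, credentials, request, retry_delay=10.0, max_attempts=None):
        if not credentials:
            raise ValueError("register at least one caller")
        self._next = itertools.cycle(credentials)  # 1 -> 2 -> ... -> n -> 1 ...
        self._request = request          # callable taking one credential
        self._retry_delay = retry_delay  # seconds to wait before retrying
        self._max_attempts = max_attempts  # None = retry forever, like the script

    def call(self):
        cred = next(self._next)  # pick the caller for this request
        attempt = 0
        while True:
            attempt += 1
            try:
                return self._request(cred)
            except Exception:
                if self._max_attempts is not None and attempt >= self._max_attempts:
                    raise
                time.sleep(self._retry_delay)


if __name__ == "__main__":
    rr = RoundRobinCaller(["A", "B", "C"], request=lambda cred: cred)
    print([rr.call() for _ in range(5)])  # ['A', 'B', 'C', 'A', 'B']
```

The sketch starts with the first registered caller, whereas ApiCaller starts with the most recently added one, because `self.count` equals the number of callers after registration.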

```python
class Spider:

    def __init__(self, callers):
        self.totalNum = 0      # total number of posts stored (including those already in the file)
        self.caller = callers
        self.idSet = set()     # ids of all posts seen so far, used to skip duplicates
        self.isStop = False    # set to True (via stopSpiding) to end the spiding() loop
        self.tag = 0           # number of polling rounds so far

    # Keep fetching posts, sleeping sleepTime seconds per round, until stopSpiding() is called
    def spiding(self, dataFile, sleepTime, apiStr, apiParams):
        self.initIdSet(dataFile)
        self.isStop = False
        weiboSum = 0
        while not self.isStop:
            print u"Crawling hard... %d" % self.tag
            self.tag = self.tag + 1
            # Call the API to get a page of posts
            ok, data = self.caller.callAPI(apiStr, apiParams)
            if not ok:
                print u"No caller registered; add one with addCaller() in createApiCaller()"
                break
            time.sleep(sleepTime)
            try:
                js = json.loads(data.read())
            except Exception:
                print 'loads failed'
                continue
            if js.get('data') is None:
                # Nothing returned: restart from the first page
                apiParams['pos'] = 0
                continue
            info = js['data']['info']
            # The next request continues from where this page ended
            apiParams['pos'] = js['data']['pos']
            # Drop posts already stored, and keep only the first copy of an id
            # that appears more than once in this page
            fresh = []
            for weibo in info:
                if weibo['id'] not in self.idSet:
                    self.idSet.add(weibo['id'])
                    fresh.append(weibo)
            js['data']['info'] = fresh
            print u"Posts fetched this round: %d" % len(fresh)
            weiboSum = weiboSum + len(fresh)
            self.totalNum = self.totalNum + len(fresh)
            print u"Fetched in this run: %d  Total posts: %d" % (weiboSum, self.totalNum)
            # Append the filtered result to the file as one JSON document per line
            jsStr = json.dumps(js, ensure_ascii=False, encoding='utf8')
            with open(dataFile, 'a') as f:
                f.write(jsStr + '\n')

    def stopSpiding(self):
        self.isStop = True

    # Load the ids of posts already stored in dataFile so they are not saved twice
    def initIdSet(self, dataFile):
        if not os.path.exists(dataFile):
            return
        with open(dataFile, 'r') as f:
            for line in f:
                if not line.strip():
                    continue
                js = json.loads(line)
                for weibo in js['data']['info']:
                    self.idSet.add(weibo['id'])
                    self.totalNum = self.totalNum + 1
```
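The paging and de-duplication logic in spiding() can be pulled out into a pure function that is easy to test without the API. The following Python 3 sketch assumes the response shape that the script relies on: `data` is null, or has `pos` and `info`, and each post has an `id`. `process_page` is a hypothetical name. It keeps the first copy of an id that appears twice in the same page. A filter of the form `idList.count(id) == 1` would drop both copies instead.

```python
def process_page(js, seen, api_params):
    """Handle one public_timeline response the way spiding() does.

    js: parsed JSON response; seen: set of ids already stored (updated in place);
    api_params: request parameters whose 'pos' is updated in place.
    Returns the list of new posts to save (possibly empty).
    """
    data = js.get("data")
    if data is None:
        api_params["pos"] = 0  # nothing returned: restart from the first page
        return []
    api_params["pos"] = data["pos"]  # next request continues after this page
    fresh = []
    for post in data["info"]:
        if post["id"] not in seen:  # skip stored posts and in-page repeats
            seen.add(post["id"])
            fresh.append(post)
    return fresh


if __name__ == "__main__":
    params = {"format": "json", "pos": 0, "reqnum": 100}
    seen = {"a"}
    page = {"data": {"pos": 42, "info": [{"id": "a"}, {"id": "b"}, {"id": "b"}, {"id": "c"}]}}
    print([p["id"] for p in process_page(page, seen, params)], params["pos"])  # ['b', 'c'] 42
```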

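```python
# Authorization: obtain an access_token and openid, then make one test request
def doOAuth():
    # Settings you need to change
    appkey = '801348303'
    redirect_url = 'http://loma1990.blog.51cto.com'
    # Instantiate
    manager = ApiManager()
    # Open the authorization page
    manager.OAuth2(appkey, redirect_url)
    # Wait for the user to paste the URL of the page the browser was redirected to after authorizing
    url = raw_input('Input the url: ')
    # Extract access_token and openid (parse_qs returns a list for each key)
    params = manager.decodeUrl(url)
    openid = params['openid'][0]
    access_token = params['access_token'][0]
    print openid
    # Print as "appkey","openid","access_token" (the argument order of addCaller())
    print '"%s","%s","%s"' % (appkey, openid, access_token)
    # API parameters
    apiParams = {'format': 'json', 'pos': '0', 'reqnum': '100'}
    # Call the API to fetch data
    data = manager.doRequest(appkey, openid, access_token, 'statuses/public_timeline', apiParams)
    # The response can only be read once, so keep its content
    content = data.read()
    # Save the fetched data to the given file
    with open("d:\\weibo.txt", "wb") as f:
        f.write(content)
    # Print the data
    print content
```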
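doOAuth() uses the OAuth 2.0 implicit flow (`response_type=token`). In this flow, the access token comes back in the fragment of the redirect URL rather than in its query string, which is why decodeUrl() swaps `#` for `?`. The following Python 3 sketch covers both halves using only `urllib.parse`. It URL-encodes `redirect_uri` and reads the fragment directly instead of rewriting the string. The function names are hypothetical, the authorize endpoint is the one from the script, and the redirect URL in the demo is made up.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Authorization endpoint used by OAuth2() in the script
AUTHORIZE_URL = "https://open.t.qq.com/cgi-bin/oauth2/authorize"


def authorize_url(app_key, redirect_url, response_type="token"):
    """Build the authorization page URL, URL-encoding every parameter."""
    query = urlencode({"client_id": app_key,
                       "response_type": response_type,
                       "redirect_uri": redirect_url})
    return AUTHORIZE_URL + "?" + query


def token_from_redirect(url):
    """Return (access_token, openid) taken from the fragment of the redirect URL."""
    params = parse_qs(urlsplit(url).fragment, keep_blank_values=True)
    return params["access_token"][0], params["openid"][0]  # parse_qs gives lists


if __name__ == "__main__":
    print(authorize_url("801348303", "http://loma1990.blog.51cto.com"))
    print(token_from_redirect("http://example.com/#access_token=abc&openid=XYZ"))  # ('abc', 'XYZ')
```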

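```python
# Create the ApiCaller
def createApiCaller():
    callers = ApiCaller()
    # Call addCaller() here with your appKey, open_id and access_token, then just run the script, e.g.
    # callers.addCaller('your_appkey', 'your_openid', 'your_access_token')
    return callers

if __name__ == '__main__':
    doOAuth()
    callers = createApiCaller()
    # API parameters
    apiParams = {'format': 'json', 'pos': 0, 'reqnum': 100}
    spider = Spider(callers)
    spider.spiding('d:\\weiboBase.txt', 5, 'statuses/public_timeline', apiParams)
```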
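The script's entry code never calls stopSpiding(), so the crawler runs until the process is killed. The `isStop` flag can only take effect if some other thread sets it. The following Python 3 sketch shows that pattern. `StoppableLoop` is a hypothetical stand-in for Spider. It uses `threading.Event` in place of the boolean flag, so a stop request also cuts the sleep between rounds short.

```python
import threading
import time


class StoppableLoop:
    """Poll repeatedly until stop() is called from another thread (illustrative)."""

    def __init__(self, poll, interval):
        self._poll = poll          # callable doing one fetch-and-save round
        self._interval = interval  # seconds between rounds (sleepTime in the script)
        self._stop = threading.Event()
        self.rounds = 0

    def run(self):
        while not self._stop.is_set():
            self._poll()
            self.rounds += 1
            # wait() returns as soon as stop() is called, unlike time.sleep()
            self._stop.wait(self._interval)

    def stop(self):
        self._stop.set()


if __name__ == "__main__":
    loop = StoppableLoop(poll=lambda: None, interval=0.1)
    worker = threading.Thread(target=loop.run)
    worker.start()
    time.sleep(0.35)
    loop.stop()      # plays the role of Spider.stopSpiding()
    worker.join()
    print("stopped after", loop.rounds, "rounds")
```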

Category: Development | Published: 2021-06-24 09:38 | Views: 405 | Comments: 0
