1. What is a web crawler?

Introduction: what exactly is a crawler?

Definition: a crawler imitates a browser, sending requests and collecting the responses.

Common uses:

1. Data collection

Scraping Weibo comments (machine learning, public-opinion monitoring)

Scraping job postings from recruitment sites (data analysis and mining)

Sina rolling news

Baidu News

2. Software testing

Automated testing with crawlers

虫师 (Chongshi)

3. Grabbing train tickets on 12306

4. Voting on websites

5. Network security

SMS bombing

Web vulnerability scanning

Classified by how many sites they crawl:

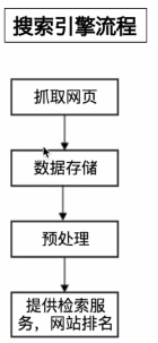

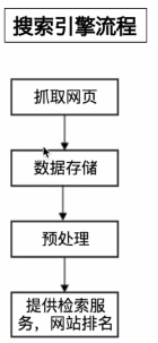

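General-purpose crawlers: usually the crawlers behind search engines. Their limitations are significant: most of the content they return is irrelevant, and users with different search goals get the same results.

(A general-purpose crawler is a key component of a search engine's crawling system (Baidu, Google, Yahoo, etc.).

Its main purpose is to download web pages to local storage, building a mirror backup of Internet content.)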

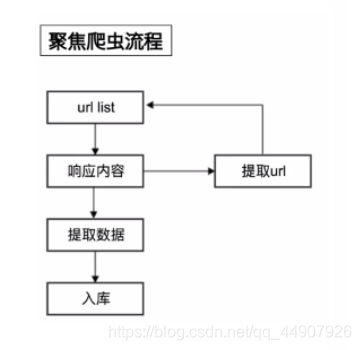

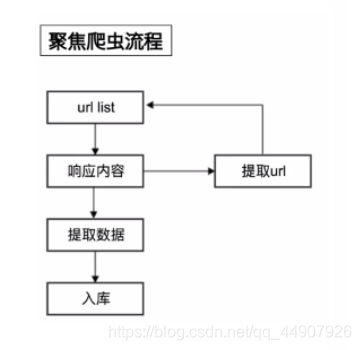

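Focused crawlers: crawlers aimed at specific sites

(A focused crawler serves a specific topic or need. It differs from a general-purpose search-engine crawler in one respect:

a focused crawler filters content while it crawls, so that as far as possible it only fetches pages relevant to that need.)

Classified by whether the goal is to collect data:

Functional crawlers, e.g. voting or liking

Incremental data crawlers, e.g. job postings

Incremental data crawlers, classified by whether the URL and the page content change:

Crawlers for pages whose URL changes along with the content

Crawlers for pages whose URL stays the same while the content changes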
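One way to picture the two kinds of incremental crawler is as two bookkeeping strategies: remember which URLs have been seen, or remember a hash of what each URL returned last time. The sketch below is only an illustration under that assumption; the class name `IncrementalTracker` and the example URLs are made up.

```python
import hashlib


class IncrementalTracker:
    """Remembers what has already been crawled (hypothetical helper)."""

    def __init__(self):
        self.seen_urls = set()      # case 1: new content shows up under new URLs
        self.content_hashes = {}    # case 2: same URL, content may change

    def is_new_url(self, url):
        """Return True only the first time a URL is seen."""
        if url in self.seen_urls:
            return False
        self.seen_urls.add(url)
        return True

    def has_changed(self, url, body):
        """Return True if the bytes at this URL differ from the previous visit."""
        digest = hashlib.sha256(body).hexdigest()
        changed = self.content_hashes.get(url) != digest
        self.content_hashes[url] = digest
        return changed


tracker = IncrementalTracker()
print(tracker.is_new_url("http://example.com/job/1"))         # True
print(tracker.is_new_url("http://example.com/job/1"))         # False
print(tracker.has_changed("http://example.com/list", b"v1"))  # True
print(tracker.has_changed("http://example.com/list", b"v1"))  # False
```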

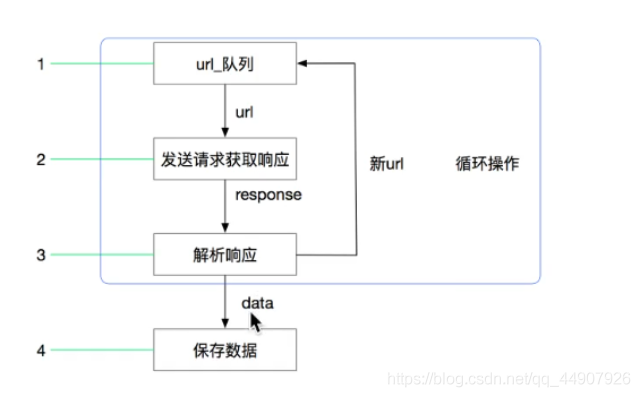

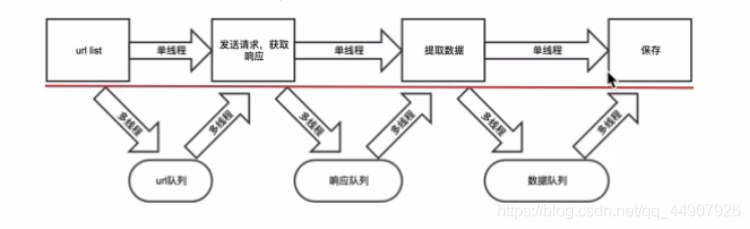

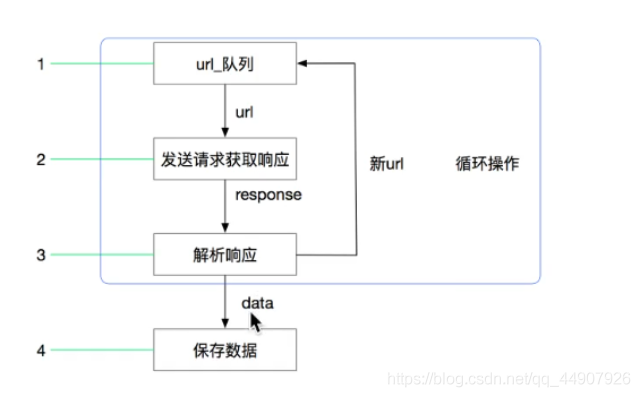

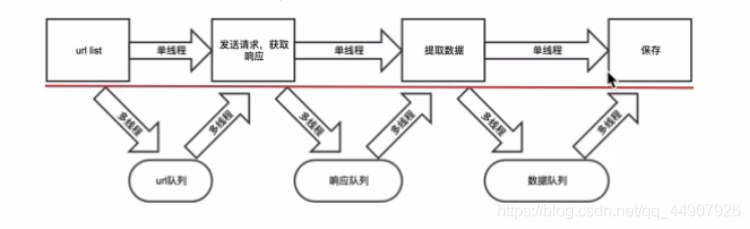

Workflow: URL --> send the request, get the response --> extract data --> save data

Send the request, get the response --> extract URLs and keep requesting
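The workflow above can be sketched as a small loop. This is a rough illustration, not a production crawler: `fetch` is passed in as a parameter so the example runs without a network, and the two-page `FAKE_SITE` and the regular expressions are assumptions made up for the demo.

```python
import re
from collections import deque


def crawl(start_url, fetch, max_pages=10):
    """Breadth-first crawl; fetch(url) must return the page as a string."""
    queue = deque([start_url])
    seen = {start_url}
    saved = {}
    while queue and len(saved) < max_pages:
        url = queue.popleft()
        html = fetch(url)                                  # send request, get response
        titles = re.findall(r"<title>(.*?)</title>", html)  # extract data
        saved[url] = titles                                # "save" the data
        for link in re.findall(r'href="(.*?)"', html):     # extract URLs
            if link not in seen:                           # keep requesting new ones
                seen.add(link)
                queue.append(link)
    return saved


# A fake two-page site standing in for real HTTP responses.
FAKE_SITE = {
    "http://example.com/": '<title>Home</title><a href="http://example.com/a">a</a>',
    "http://example.com/a": '<title>Page A</title><a href="http://example.com/">home</a>',
}

result = crawl("http://example.com/", FAKE_SITE.__getitem__)
print(result)
```

With requests installed, `fetch` could be something like `lambda url: requests.get(url).content.decode()`.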

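- The robots protocol:

Through the robots protocol (a robots.txt file), a site tells search engines which pages may be crawled and which may not. It is only a moral constraint; nothing enforces it technically.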
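Python's standard library can read these rules. The snippet below is a small sketch: the robots.txt content and the crawler name `MySpider` are made up for illustration, and the rules are parsed from a string so nothing is downloaded.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt; a real one is served at http://<host>/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether a given user agent may fetch a given URL.
print(parser.can_fetch("MySpider", "http://example.com/index.html"))      # True
print(parser.can_fetch("MySpider", "http://example.com/private/a.html"))  # False
```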

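- Key HTTP request headers:

User-Agent: tells the server what kind of client is requesting the resource. Setting it is one of the most important ways for a crawler to imitate a browser.

By setting User-Agent to a real browser's UA string, a crawler can pass itself off as that browser.

Cookie: needed to access resources that are only available after logging in

Common request and response headers:

Content-Type

Host (host name and port)

Upgrade-Insecure-Requests (asks the server to upgrade to HTTPS)

User-Agent (identifies the browser)

Referer (the page the request was navigated from)

Cookie

Authorization (carries the credentials for resources that require HTTP authentication, e.g. a JWT)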

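How a browser sends an HTTP request:

1. Resolve the domain name -->

2. Start the TCP three-way handshake -->

3. Once the TCP connection is established, send the HTTP request -->

4. The server answers the HTTP request and the browser receives the HTML -->

5. The browser parses the HTML and requests the resources it references (JS, CSS, images, etc.) -->

6. The browser renders the page and shows it to the user.

Note:

In the browser's developer tools, under Network -> Name -> Request Headers (view parsed),

Connection: keep-alive keeps the connection open, so the three-way handshake and four-way teardown do not have to be repeated for every request.

What the browser ends up with (the content of the Elements panel) includes: the response for the URL + JS + CSS + images

What a crawler gets: only the response for the URL

Because the crawler's content differs from the Elements panel, data extraction has to be based on the response for the URL.

1. HTTP: HyperText Transfer Protocol, default port 80

2. HTTPS: also a hypertext transfer protocol, run over an encrypted connection, default port 443

HTTP transmits in plaintext while HTTPS transmits encrypted data, so HTTPS is more secure than HTTP but somewhat slower, because encryption and decryption take time.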
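The default ports can be made concrete with a short helper. This is just a sketch built on the standard library's `urlsplit`; the function name `host_and_port` and the `DEFAULT_PORTS` table are my own, not part of any library.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def host_and_port(url):
    """Return (host, port), falling back to the scheme's default port."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS[parts.scheme]
    return parts.hostname, port


print(host_and_port("http://www.baidu.com"))        # ('www.baidu.com', 80)
print(host_and_port("https://tieba.baidu.com/f"))   # ('tieba.baidu.com', 443)
print(host_and_port("http://192.168.13.24:8000"))   # ('192.168.13.24', 8000)
```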

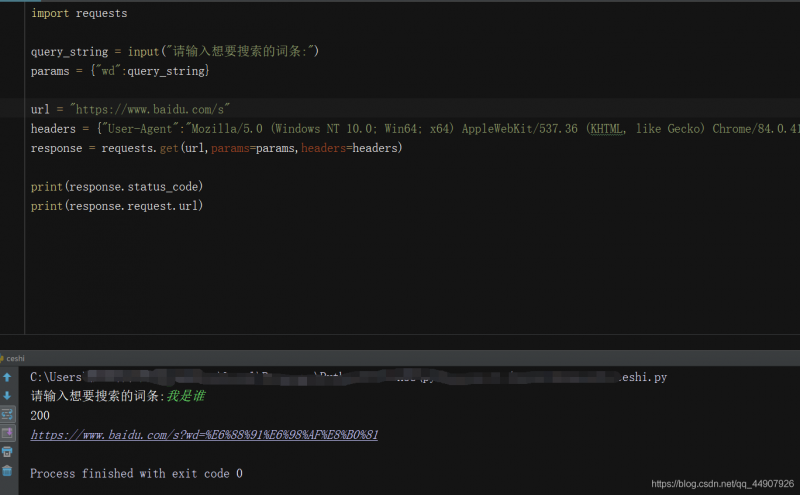

2. Getting started with crawling: using the requests module

1. Decoding a URL:

    requests.utils.unquote(url)
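To see what decoding does, here is a small sketch using the standard library's `urllib.parse`, which, as far as I know, is where `requests.utils.unquote` comes from. The URL is the Tieba search URL used later in this article, written in its percent-encoded form.

```python
from urllib.parse import quote, unquote

encoded = "https://tieba.baidu.com/f?kw=%E7%BE%8E%E9%A3%9F&ie=utf-8&pn=0"
decoded = unquote(encoded)
print(decoded)        # https://tieba.baidu.com/f?kw=美食&ie=utf-8&pn=0

# quote() goes the other way for a single value.
print(quote("美食"))  # %E7%BE%8E%E9%A3%9F
```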

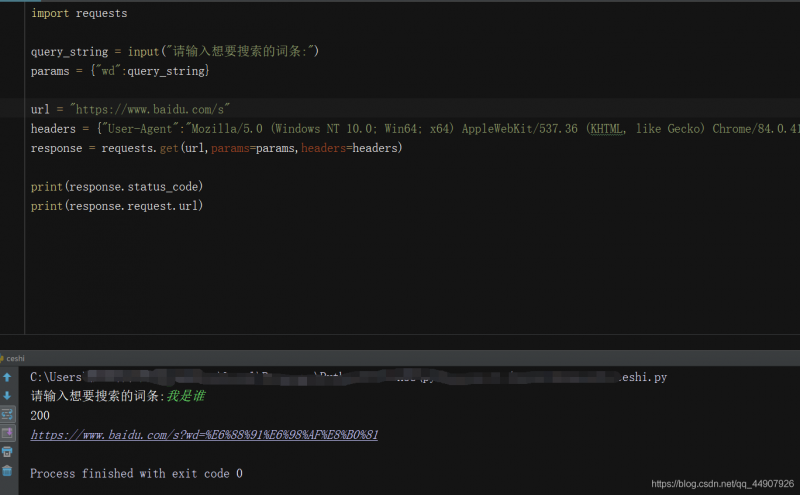

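2. Using headers with requests:

    headers = {"User-Agent": ""}
    requests.get(url, headers=headers)

3. Sending a POST request with requests:

    data = {"key": "value"}  # the form data shown in the browser
    requests.post(url, data=data)

Concept: a crawler imitates a browser, sending network requests and getting the responses.

4. Sending a request and getting the response:

    response = requests.get(url)

response.text ---> str (the data as a string)

response.encoding = "utf-8" (if the text is garbled, set the correct encoding before reading response.text)

response.content ---> bytes (the data as raw bytes)

response.content.decode() (bytes have to be decoded into a string)

5. The response:

response.text: the body as str

response.content: the body as bytes; call decode() to get a string

response.status_code: the status code

response.request.headers: the headers of the request that produced this response

response.headers: the response headers

response.request.url: the URL of the request

response.url: the URL of the response

response.request._cookies: the cookies sent with the request, as a CookieJar

response.cookies: the cookies set by the response (via Set-Cookie), as a CookieJar

response.json(): parses a JSON response body into a Python object (dict or list)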
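The difference between `response.content` and `response.text` comes down to decoding bytes with the right codec. The sketch below shows that step without any network: `raw` merely stands in for the bytes a GBK-encoded page might put in `response.content`, which is an assumption made for the demo.

```python
# Bytes as they might arrive in response.content from a GBK-encoded page.
raw = "百度贴吧".encode("gbk")

# Decoding with the wrong codec fails here (with other input it may
# silently produce garbled text instead).
try:
    raw.decode("utf-8")
    wrong_codec_worked = True
except UnicodeDecodeError:
    wrong_codec_worked = False

text = raw.decode("gbk")   # the right codec recovers the text
print(wrong_codec_worked, text)
```

This is why a garbled `response.text` is usually fixed by setting `response.encoding`, or by calling `response.content.decode()` with the page's real encoding.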

6. Sending a request with parameters:

    params = {"": ""}
    url_temp = "the URL without its query string"
    requests.get(url_temp, params=params)

Example: wd is the parameter key in the URL of a Baidu search
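What `params` does can be imitated with the standard library. This is a sketch of the idea rather than what requests runs internally; the base URL `https://www.baidu.com/s` and the search word are assumptions chosen to match the `wd` example.

```python
from urllib.parse import urlencode

url_temp = "https://www.baidu.com/s"   # assumed base URL, no query string
params = {"wd": "python"}

# Encode the dict as key=value pairs and attach it after "?".
full_url = url_temp + "?" + urlencode(params)
print(full_url)   # https://www.baidu.com/s?wd=python
```

`requests.get(url_temp, params=params)` should end up requesting an equivalent URL, which you can check afterwards via `response.url`.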

3. Hands-on: crawling Baidu Tieba with the requests library

    import os

    import requests

    '''
    To build the right URL:
    open Baidu Tieba, search for anything, page through the results,
    and look for the pattern in the URL:
    https://tieba.baidu.com/f?kw=美食&ie=utf-8&pn=0
    https://tieba.baidu.com/f?kw=美食&ie=utf-8&pn=50
    https://tieba.baidu.com/f?kw=美食&ie=utf-8&pn=100
    https://tieba.baidu.com/f?kw=美食&ie=utf-8&pn=150
    '''


    class TiebaSpider:
        def __init__(self, tieba_name):
            self.tieba_name = tieba_name
            self.url_temp = "https://tieba.baidu.com/f?kw=" + tieba_name + "&ie=utf-8&pn={}"
            self.headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.89 Safari/537.36"}

        # Build the URL list: pn grows by 50 per page
        def get_url_list(self):
            return [self.url_temp.format(i * 50) for i in range(5)]

        # Send the request and get the response
        def parse_url(self, url):
            response = requests.get(url, headers=self.headers)
            return response.content.decode()

        # Save one page into the ceshi directory
        def save_html_str(self, html_str, page_num):
            file_name = "{}_page_{}.html".format(self.tieba_name, page_num)
            dir_name = 'ceshi'
            if not os.path.exists(dir_name):
                os.mkdir(dir_name)
            file_path = dir_name + '/' + file_name
            with open(file_path, "w", encoding='utf-8') as f:
                f.write(html_str)
            print("Saved!")

        # Main logic
        def run(self):
            # Build the URL list
            url_list = self.get_url_list()
            # Send the requests and get the responses
            for page_num, url in enumerate(url_list, start=1):
                html_str = self.parse_url(url)
                # Save
                self.save_html_str(html_str, page_num)


    if __name__ == '__main__':
        name_date = input("Enter what you want to look up: ")
        tieba_spider = TiebaSpider(name_date)
        tieba_spider.run()

This code fetches the HTML source of the first 5 result pages for the given search term.

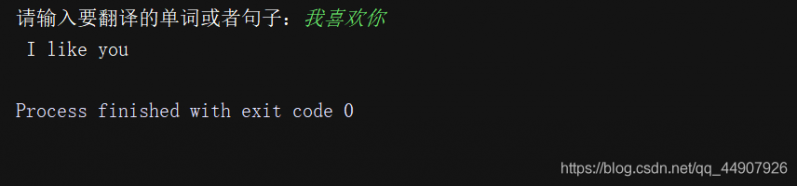

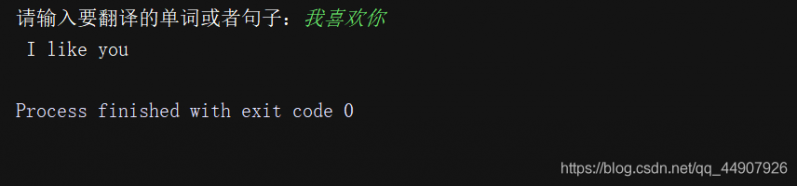

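4. Sending POST requests with the requests module

Use cases: logging in and registering

Transferring large amounts of text (a POST request places no limit on length)

- Usage:

    response = requests.post("http://www.baidu.com", data=data, headers=headers)

Form of data: a dict

Source of data: fixed values, or values captured from the browser (form data)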

Hands-on: a Chinese-to-English translation crawler

    import json

    import requests


    class King(object):
        def __init__(self, word):
            self.url = 'http://fy.iciba.com/ajax.php?a=fy'
            self.headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.79 Safari/537.36'}
            self.data = {
                'f': 'auto',
                't': 'auto',
                # 'w': '字典'
                'w': word
            }

        def post_data(self):
            response = requests.post(self.url, data=self.data, headers=self.headers)
            return response.content

        def parse_data(self, data):
            # Convert the JSON string into a dict
            dict_data = json.loads(data)
            try:
                print(dict_data['content']['out'])
            except KeyError:
                # Some replies carry the result under word_mean instead of out
                print(dict_data['content']['word_mean'][0])

        def run(self):
            # Crawler logic: url, headers and the data dict are set in __init__
            # Send the request and get the response
            response = self.post_data()
            # print(response)
            # Parse the data and print the translation
            self.parse_data(response)


    if __name__ == '__main__':
        word = input('Enter a word or sentence to translate: ')
        king = King(word)
        king.run()

5. Using proxies:

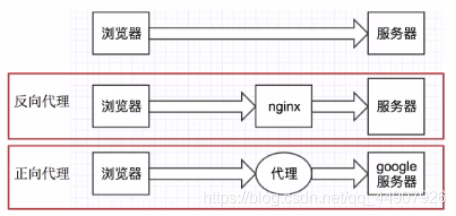

- What is a proxy?

A proxy IP is an IP address that points to a proxy server.

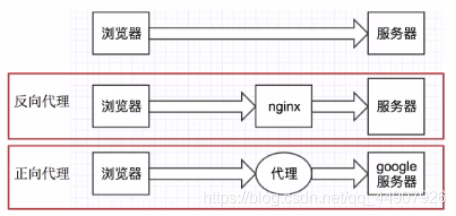

- Forward proxies and reverse proxies: what are they?

The criterion is whether the client knows the address of the server that finally handles the request: if it does, the proxy is a forward proxy; if it does not, it is a reverse proxy.

- Types of proxy IP

By anonymity:

Transparent proxy: the target server can find your IP through the proxy

Anonymous proxy: somewhere between the two

High-anonymity proxy: commonly used in crawlers; the target server cannot obtain your IP

By protocol:

Depending on the protocol the site uses, you need a proxy service for the corresponding protocol.

HTTP proxy: the target URL uses HTTP

HTTPS proxy: the target URL uses HTTPS

SOCKS proxy: simply forwards packets without caring about the protocol; it has less overhead than HTTP and HTTPS proxies and can forward both HTTP and HTTPS requests

- Why use a proxy?

(1) To make the server believe the requests do not all come from the same client

(2) To keep our real address from being exposed and traced

- Usage:

    response = requests.get("http://www.baidu.com", proxies=proxies)

Form of proxies: a dict

For example:

    proxies = {
        "http": "http://192.168.13.24:8000",
        "https": "http://192.168.13.24:8000"
    }
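When several proxies are available, a common pattern is to pick one at random for each request. The helper below is only a sketch: `PROXY_POOL`, `build_proxies` and `random_proxies` are hypothetical names, and the second address in the pool is invented for illustration.

```python
import random

# Hypothetical pool; replace with proxies you are actually allowed to use.
PROXY_POOL = ["192.168.13.24:8000", "192.168.13.25:8000"]


def build_proxies(address):
    """Build the dict that requests expects in its proxies argument."""
    proxy_url = "http://" + address
    return {"http": proxy_url, "https": proxy_url}


def random_proxies(pool=PROXY_POOL):
    """Pick one proxy from the pool at random."""
    return build_proxies(random.choice(pool))


print(build_proxies("192.168.13.24:8000"))
```

The result can be passed straight to a request, for example `requests.get(url, proxies=random_proxies(), timeout=5)`.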

Example:

    import requests

    url = "http://www.baidu.com"
    proxies = {
        'http': 'http://111.222.11.123:12222',
    }
    headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0;.36 (KHTML, like Gecko) Chrome/84.0.414.36"}
    response = requests.get(url, proxies=proxies, headers=headers, timeout=5)
    print(response.text)

6. Cookies and sessions

(1) Getting cookies with requests

requests.utils.dict_from_cookiejar converts a CookieJar object into a dict.

Example:

    import requests

    url = 'http://www.baidu.com'
    response = requests.get(url)
    cookie = requests.utils.dict_from_cookiejar(response.cookies)
    print(cookie)

cookie is a dict:

    {'ucloud_zz': '1'}

(2) Sending cookies with requests

1. Put the cookie string in headers:

    headers = {
        'User-Agent': 'xxxxx',
        'Cookie': 'xxxxx'
    }

2. Pass a cookie dict to the cookies parameter of the request method:

    cookie_dict = {'cookie name': 'cookie value'}

Usage:

    requests.get(url, headers=headers, cookies=cookie_dict)
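A cookie copied from the browser is one long string, while the cookies parameter wants a dict. A small conversion helper might look like the sketch below; `cookie_string_to_dict` is a name I made up, and the cookie values are invented.

```python
def cookie_string_to_dict(cookie_string):
    """Turn 'a=1; b=2' (as copied from a Cookie header) into {'a': '1', 'b': '2'}."""
    pairs = (part.strip() for part in cookie_string.split(";"))
    # Split on the first "=" only, because cookie values may contain "=".
    return dict(pair.split("=", 1) for pair in pairs if "=" in pair)


cookie_dict = cookie_string_to_dict("sessionid=abc123; theme=dark")
print(cookie_dict)   # {'sessionid': 'abc123', 'theme': 'dark'}
```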

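(3) Sending cookies with requests: session

requests provides a Session class for keeping the conversation between client and server alive.

- Session (state) persistence:

Stores cookies

Keeps a long-lived connection to the server

- Usage:

    session = requests.Session()
    response = session.get(url, headers=headers)

After a session requests a site, the cookies the server sets are stored in the session; the next request made through that session to the same server carries them along.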

- Hands-on: Renren

    import requests

    # 1. Create a session
    session = requests.Session()

    # 2. Send the POST request with the session; the server's cookies are stored in the session
    headers = {"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US) AppleWebKit/532.2 (KHTML, like Gecko) Chrome/4.0.222.3 "}
    post_url = "http://www.renren.com/PLogin.do"
    post_data = {"email": "your account", "password": "your password"}
    session.post(post_url, data=post_data, headers=headers)

    # 3. Request the profile page; the earlier cookies are sent along, so the request succeeds
    profile_url = "http://www.renren.com/<the ID shown in your own profile URL>/profile"
    response = session.get(profile_url, headers=headers)

    with open("renren.html", "w", encoding="utf-8") as f:
        f.write(response.content.decode())

(4) Three ways to simulate a login with requests

1. Use a session

2. Put the cookie string in headers

3. Pass a cookie dict to the cookies parameter

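- Handling SSL certificates with requests:

An untrusted SSL certificate makes the crawler fail with a certificate error (ssl.CertificateError, which requests reports as requests.exceptions.SSLError).

Fix:

    response = requests.get(url, verify=False)

verify=False turns certificate verification off; the default, True, verifies the certificate.

- requests and the timeout parameter (useful for checking an IP proxy pool):

    response = requests.get(url, timeout=3)

timeout=3 means waiting at most 3 seconds for the server to respond; otherwise an exception is raised.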

(5) The retrying module (retries) and the timeout parameter

1. Use the retry function provided by the retrying module

2. Applied as a decorator, it makes the decorated function run again when it fails

3. retry accepts stop_max_attempt_number: when the function raises, it is run again until the maximum number of attempts is reached. If every attempt raises, the error propagates; if any attempt succeeds, the program carries on.

    import requests
    from retrying import retry

    headers = {"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US) AppleWebKit/532.2 (KHTML, like Gecko) Chrome/4.0.222.3 "}


    @retry(stop_max_attempt_number=3)  # at most 3 attempts; raise if all of them fail
    def _parse_url(url):  # the leading underscore marks the function as private by convention
        print("*" * 100)
        response = requests.get(url, headers=headers, timeout=3)  # wait at most 3 s for a response
        assert response.status_code == 200  # any other status code raises AssertionError
        return response.content.decode()


    def parse_url(url):
        try:
            html_str = _parse_url(url)
        except Exception as e:
            print(e)
            html_str = None
        return html_str

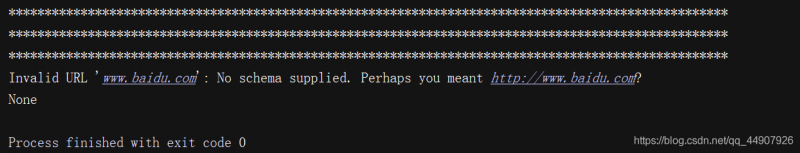

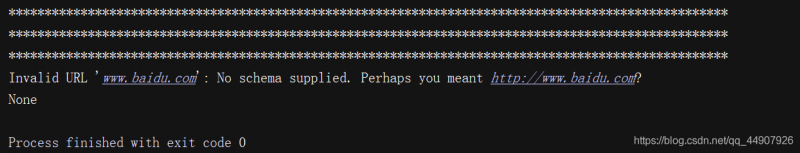

    if __name__ == '__main__':
        # url = "www.baidu.com"  # this raises an error!
        url = "http://www.baidu.com"
        print(parse_url(url))

Fails: url = "www.baidu.com" (the URL has no scheme, so requests raises MissingSchema)

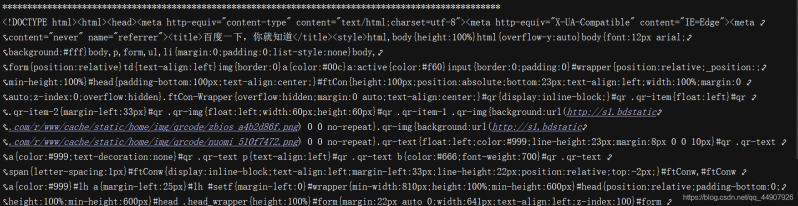

Works: url = "http://www.baidu.com"
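To make the behaviour of stop_max_attempt_number easier to picture, here is a hand-rolled stand-in written with the standard library only. It is not the retrying library's code, just a sketch of the same idea; `retry`, `max_attempts` and `flaky` are names I chose for the demo.

```python
import functools


def retry(max_attempts=3):
    """Re-run the decorated function until it succeeds or attempts run out."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, max_attempts + 1):
                try:
                    return func(*args, **kwargs)
                except Exception:
                    if attempt == max_attempts:
                        raise   # every attempt failed: let the error out
        return wrapper
    return decorator


calls = []


@retry(max_attempts=3)
def flaky():
    """Fails twice, then succeeds (stands in for an unreliable request)."""
    calls.append(1)
    if len(calls) < 3:
        raise ConnectionError("temporary failure")
    return "ok"


print(flaky(), len(calls))   # ok 3
```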

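7. Multithreaded crawlers

(1) Multithreaded crawlers

- Approach:

In Python 3, child threads and child processes do not stop just because the main thread of the main process finishes.

Make a child thread a daemon thread so that it ends when the main thread ends.

    t1 = threading.Thread(target=func, args=(arg,))
    t1.daemon = True  # replaces the deprecated t1.setDaemon(True)
    t1.start()  # start the thread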
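A complete, runnable version of the daemon-thread idea might look like the sketch below. The `worker` function is a made-up stand-in for "download one page"; the short sleep only simulates waiting on the network.

```python
import threading
import time


def worker(results, n):
    """Pretend to download page n (hypothetical stand-in for a request)."""
    time.sleep(0.01)
    results.append(n)


results = []
threads = []
for n in range(3):
    t = threading.Thread(target=worker, args=(results, n))
    t.daemon = True      # the thread will not keep the program alive on its own
    t.start()
    threads.append(t)

for t in threads:
    t.join()             # wait here so the daemon threads get to finish

print(sorted(results))   # [0, 1, 2]
```

Without the join() calls the main thread could finish first, and the daemon threads would be cut off mid-work.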

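(2) Using the queue module (handing work to threads through a queue)

    from queue import Queue

    q = Queue(maxsize=100)
    item = {}

    # Put without waiting; raises queue.Full when the queue is full
    q.put_nowait(item)

    # Put an item; blocks while the queue is full
    q.put(item)

    # Get without waiting; raises queue.Empty when the queue is empty
    q.get_nowait()
    q.task_done()

    # Get an item; blocks while the queue is empty
    q.get()
    q.task_done()

    # Number of items currently in the queue
    q.qsize()

    # The queue keeps a count of unfinished tasks; join() blocks the calling
    # thread until that count drops to 0, then execution continues
    q.join()

q.task_done(): used together with get(); decrements the count by 1

q.put(): increments the count by 1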
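Putting the queue and daemon threads together gives the usual producer/consumer shape of a multithreaded crawler. The sketch below makes no real requests: the URLs are invented and the "work" is just recording each URL, so treat it as an outline of the pattern rather than a finished crawler.

```python
import threading
from queue import Queue

url_queue = Queue()
results = []
lock = threading.Lock()


def worker():
    """Take URLs off the queue forever; as a daemon thread it dies with main."""
    while True:
        url = url_queue.get()        # blocks while the queue is empty
        with lock:
            results.append(url)      # stand-in for "request and parse"
        url_queue.task_done()        # count - 1


for _ in range(3):
    threading.Thread(target=worker, daemon=True).start()

for page in range(5):
    url_queue.put("http://example.com/page/{}".format(page))   # count + 1

url_queue.join()        # returns once every put() has a matching task_done()
print(len(results))     # 5
```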

Programming languages | Published: 2021-06-24 10:21 | Views: 323 | Comments: 0
